The Microsoft Mixed Reality Hackathon

For the last 30ish days, Microsoft has been running a Mixed Reality Hackathon, encouraging people to experiment with either MRTK (Mixed Reality Toolkit) or their relatively new code-first Mixed Reality library, StereoKit, and giving participants a chance of winning some of the $17,500 prize pool!

After looking at the code-first nature of StereoKit and what can be achieved with very little code, I opted to try StereoKit.

It also helps that I can use the StereoKit library straight from inside Visual Studio with C# code and deploy straight to my Oculus Quest 2 headset (which was just gathering dust) in a matter of seconds.

The brief for the hackathon was "A New Way to Solve an Old Problem", which was fortunately not too prescriptive and gave sufficient scope for interpretation and creativity.

I found the StereoKit library easy to use, as it supports mixed reality interactions out of the box (for example, controller and hand gesture input).

That said, even though the StereoKit documentation and samples are extremely good, I still had to ask a few questions in the GitHub discussions area and Discord channel, which were swiftly answered by StereoKit maintainers and community contributors. Thank you!

This is one of the very reasons Microsoft ran the hackathon - to get feedback from developers and identify where documentation or samples can be expanded and better explained.

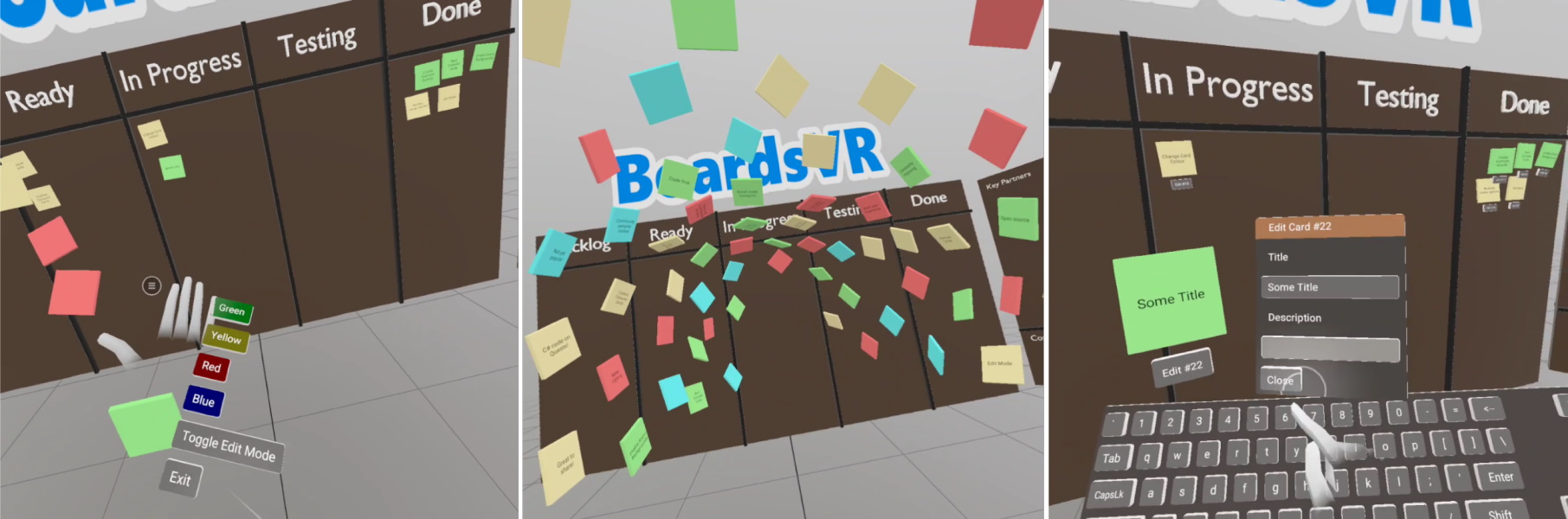

What I created - BoardsVR

In the same way that you can use post-it notes and coloured cards on whiteboards IRL, I decided to see if I could create a coloured card system and a variety of board templates in VR. Hence BoardsVR was born (and BoardsVR.com registered to help fuel my domain registering problem).

The main features I initially wanted to include were pretty basic, just enough to create a proof of concept (POC):

- Multi-coloured cards

- Editable card text

- Re-positionable cards

- Multiple board templates (created in Blender)

- Multiple background environments

And I think the end result is quite nice given the relatively little effort and code involved. I am also led to believe that the same code could be deployed to a HoloLens and "just work" in much the same way it does in VR, but in AR.

View The Demo Video

The hackathon called for a 5-minute (max) video to be submitted, demoing and explaining the project, so here it is below. Please be kind and remember, it is just a hackathon project.

Sorry it is a bit cringy! (but I had fun making it). I recommend watching full screen with the sound up!

BoardsVR.com

As mentioned, I've posted a bit of information about the hackathon project at BoardsVR.com, including the intro/demo/explanation video and links to the hackathon and GitHub repo.

Summary

I have succeeded in quenching my creative curiosity and implementing my idea in StereoKit, learning some of its capabilities at the same time. As I already have skills in Augmented Reality and ARKit, it is nice to add StereoKit and VR to my toolbelt for building immersive experiences.

I think StereoKit has a bright future as:

- It can be deployed very quickly to Oculus Quest 2 (a popular VR headset)

- It can be used straight from Visual Studio and doesn't need Unity

- You create your app in C#

- It is code first

- The library footprint is small

- The library syntax is concise and easy to use

And as it is built on top of OpenXR, it should be supported by an increasing number of devices in the future. I hope Microsoft continue to invest in the improvement and (more importantly) longevity of StereoKit.

As the war of XR platforms is heating up, it is important for MS to provide a variety of XR options for developers in the Microsoft ecosystem.

This is one of the most enjoyable hackathon projects I've done in a while. Please watch the video and enjoy the funky dance music.

-- Lee