If you've read part 1 (the introduction) of this series of posts, you'll know what we are trying to achieve. If you haven't read it yet, I suggest you go and read it. It won't take long... I'll wait.

Right. Ready?

In this post, I will describe how the following was achieved.

Image Recognition with Xamarin ARKit

So we are going to start by trying to do some simple image recognition.

It's assumed that the reader already knows how to deploy a Xamarin.iOS Single View Application to their device. If not, I will cover how to get started with this in another post (it is too long a process to include in this tutorial).

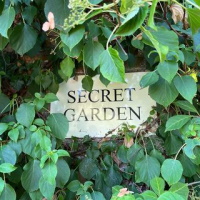

Firstly, we need to choose an image to recognise.

I chose a nearby book cover. I actually got the image from Amazon.

- In Visual Studio for Mac, in an existing Single View App that you already have deploying to your phone, double-click the Assets.xcassets folder.

- Click the green plus icon and create a New AR Resource Group. I called mine 'AR Resources'.

- Inside that, create a New AR Resource Image. I called mine ARImage.

- Select the newly created AR Resource Image and double-click the image placeholder on the right-hand side to choose the image on your computer that you want to be recognised. Then set the Size to the dimensions you expect the image to have in real life.

In the ViewController's ViewDidAppear override, we get the detection images and add them to the DetectionImages property of the ARWorldTrackingConfiguration that the SceneView's session is run with.
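A minimal sketch of what that ViewController could look like. It assumes the 'AR Resources' group name from the steps above, an ARSCNView created in code and held in a field called `sceneView`, a hypothetical `ImageRecognition` namespace, and iOS 12 or later (`MaximumNumberOfTrackedImages` was added to `ARWorldTrackingConfiguration` in iOS 12). `SceneViewDelegate` is the custom delegate described next.

```csharp
using System;
using ARKit;
using UIKit;

namespace ImageRecognition // hypothetical namespace
{
    public partial class ViewController : UIViewController
    {
        // Assumption: the ARSCNView is created in code rather than in the storyboard.
        private readonly ARSCNView sceneView = new ARSCNView();

        protected ViewController(IntPtr handle) : base(handle) { }

        public override void ViewDidLoad()
        {
            base.ViewDidLoad();

            sceneView.Frame = View.Bounds;
            // SceneViewDelegate is the custom ARSCNViewDelegate described in this post.
            sceneView.Delegate = new SceneViewDelegate();
            View.AddSubview(sceneView);
        }

        public override void ViewDidAppear(bool animated)
        {
            base.ViewDidAppear(animated);

            // Load the reference images from the "AR Resources" group in Assets.xcassets
            // (a null bundle means the app's main bundle).
            var detectionImages = ARReferenceImage.GetReferenceImagesInGroup("AR Resources", null);

            var configuration = new ARWorldTrackingConfiguration
            {
                DetectionImages = detectionImages,
                // iOS 12+; we only have one image to track anyway.
                MaximumNumberOfTrackedImages = 1
            };

            // Start (or restart) the session with a clean slate.
            sceneView.Session.Run(configuration,
                ARSessionRunOptions.ResetTracking | ARSessionRunOptions.RemoveExistingAnchors);
        }
    }
}
```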

Note: Don't worry too much about the MaximumNumberOfTrackedImages property at the moment.

Then, in the ViewController, I am using a custom ARSCNViewDelegate called SceneViewDelegate.
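A minimal sketch of what SceneViewDelegate might look like, assuming a hypothetical `ImageRecognition` namespace. The plane is sized from the detected image's `ReferenceImage.PhysicalSize` (the real-world Size set on the AR Resource Image) and rotated by -π/2 about its X axis so that it lies flat on the image.

```csharp
using System;
using ARKit;
using SceneKit;
using UIKit;

namespace ImageRecognition // hypothetical namespace
{
    public class SceneViewDelegate : ARSCNViewDelegate
    {
        // Called when ARKit adds a node for a newly detected anchor.
        public override void DidAddNode(ISCNSceneRenderer renderer, SCNNode node, ARAnchor anchor)
        {
            // Only react to anchors created for a detected reference image.
            if (!(anchor is ARImageAnchor imageAnchor))
                return;

            // Which reference image was detected (we only registered one).
            var detectedImage = imageAnchor.ReferenceImage;

            // A plane with the same physical width and height as the detected image.
            var plane = SCNPlane.Create(detectedImage.PhysicalSize.Width,
                                        detectedImage.PhysicalSize.Height);

            // A plain blue material, applied to the plane geometry.
            var blueMaterial = new SCNMaterial();
            blueMaterial.Diffuse.Contents = UIColor.Blue;
            plane.Materials = new[] { blueMaterial };

            // A node that displays the plane at 90% opacity (slightly transparent).
            var planeNode = new SCNNode
            {
                Geometry = plane,
                Opacity = 0.9f
            };

            // SCNPlane stands upright in its local X-Y plane; a -90 degree turn about X
            // lays it flat in the anchor's X-Z plane, on top of the image.
            planeNode.EulerAngles = new SCNVector3((float)(-Math.PI / 2), 0, 0);

            // Attach the plane to the node ARKit created for the image anchor.
            node.AddChildNode(planeNode);
        }
    }
}
```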

In it, we:

- Override the DidAddNode method and check whether the anchor is an ARImageAnchor

- Determine which image was detected (remember, we only set one anyway)

- Create a new Plane and set its width and height to match those of the detected image

- Declare a blue material

- Create a new SceneKit node and set its Geometry to the Plane we just created

- Give the node the blue material and set the plane's opacity to 90% so it's a little transparent

- Rotate the plane a quarter turn about its X axis: by default a plane stands upright in its own coordinate space, which would leave it perpendicular to the detected anchor image, so we use some maths to make it parallel to the image instead

- Finally, add the new plane node to the existing node that ARKit created for the anchor
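To see why a quarter turn about X is the right correction: an `SCNPlane` lies in the X-Y plane of its own coordinate space, so its front face points along +Z, while an `ARImageAnchor` places the detected image in its X-Z plane, with +Y pointing out of the image. The plain .NET sketch below uses `System.Numerics` rather than SceneKit so it runs anywhere (the `PlaneRotation` class is purely illustrative) and checks that rotating +Z by -π/2 about the X axis gives +Y.

```csharp
using System;
using System.Numerics;

public static class PlaneRotation
{
    // Rotates a vector by the given angle (in radians) about the X axis.
    public static Vector3 RotateAboutX(Vector3 v, float angleRadians)
    {
        var rotation = Quaternion.CreateFromAxisAngle(Vector3.UnitX, angleRadians);
        return Vector3.Transform(v, rotation);
    }

    public static void Main()
    {
        // The plane's front-face normal in its own local space.
        var planeNormal = Vector3.UnitZ;

        // After the -90 degree turn it should match the image anchor's +Y axis
        // (up to floating-point rounding).
        var rotated = RotateAboutX(planeNormal, -MathF.PI / 2);

        Console.WriteLine($"({rotated.X:F3}, {rotated.Y:F3}, {rotated.Z:F3})");
    }
}
```

Rotating by +π/2 instead would also make the plane parallel to the image, but its front face would point into the image; since SceneKit materials are single-sided by default, the plane would then likely be invisible when viewed from above.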

And that is all that is needed to overlay a semi-transparent plane on a detected image anchor in the augmented reality scene, as the video at the top of this post shows.

In the next post, we will look at placing a more complex 3D model on the detected 2D image anchor.

-- Lee